Bouncing bomb card game design workflow

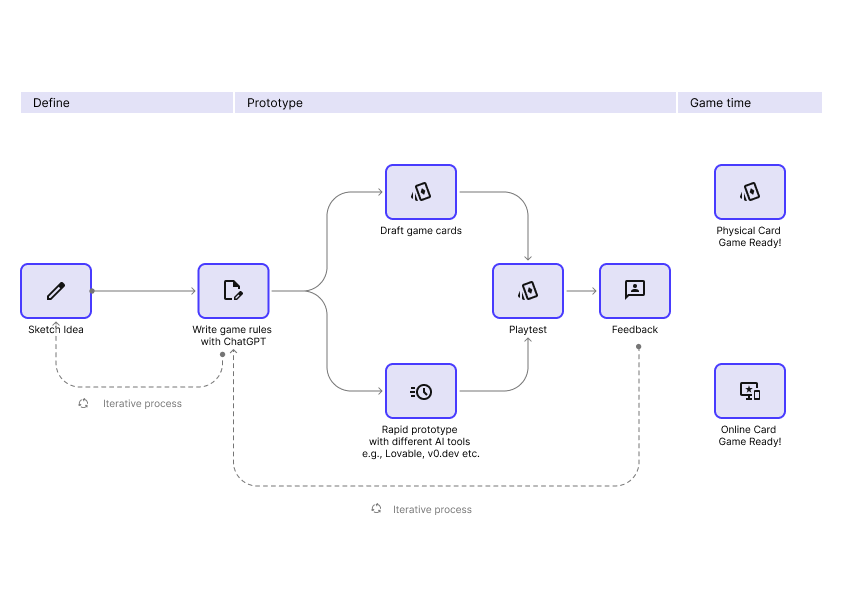

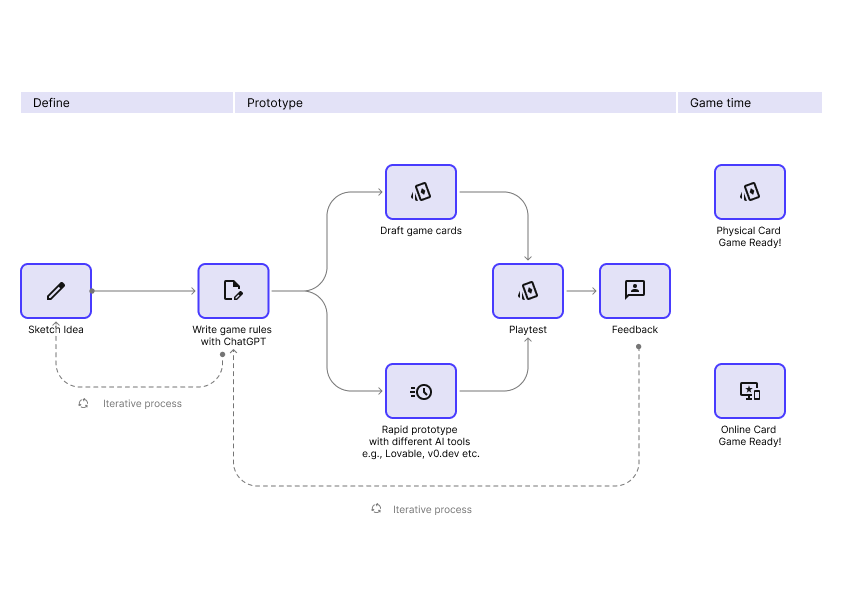

I mapped out a high-level workflow to track how ideas moved from sketch → AI generation → digital prototype → physical cards → playtesting.

Figure 1: Design workflow diagram

🔁 Hybrid Testing: AI-Informed, Human-Validated

As a board game enthusiast, I set out to explore how far AI tools like ChatGPT, Lovable, and Replit could take a simple card game idea, from scratch to a playable prototype.

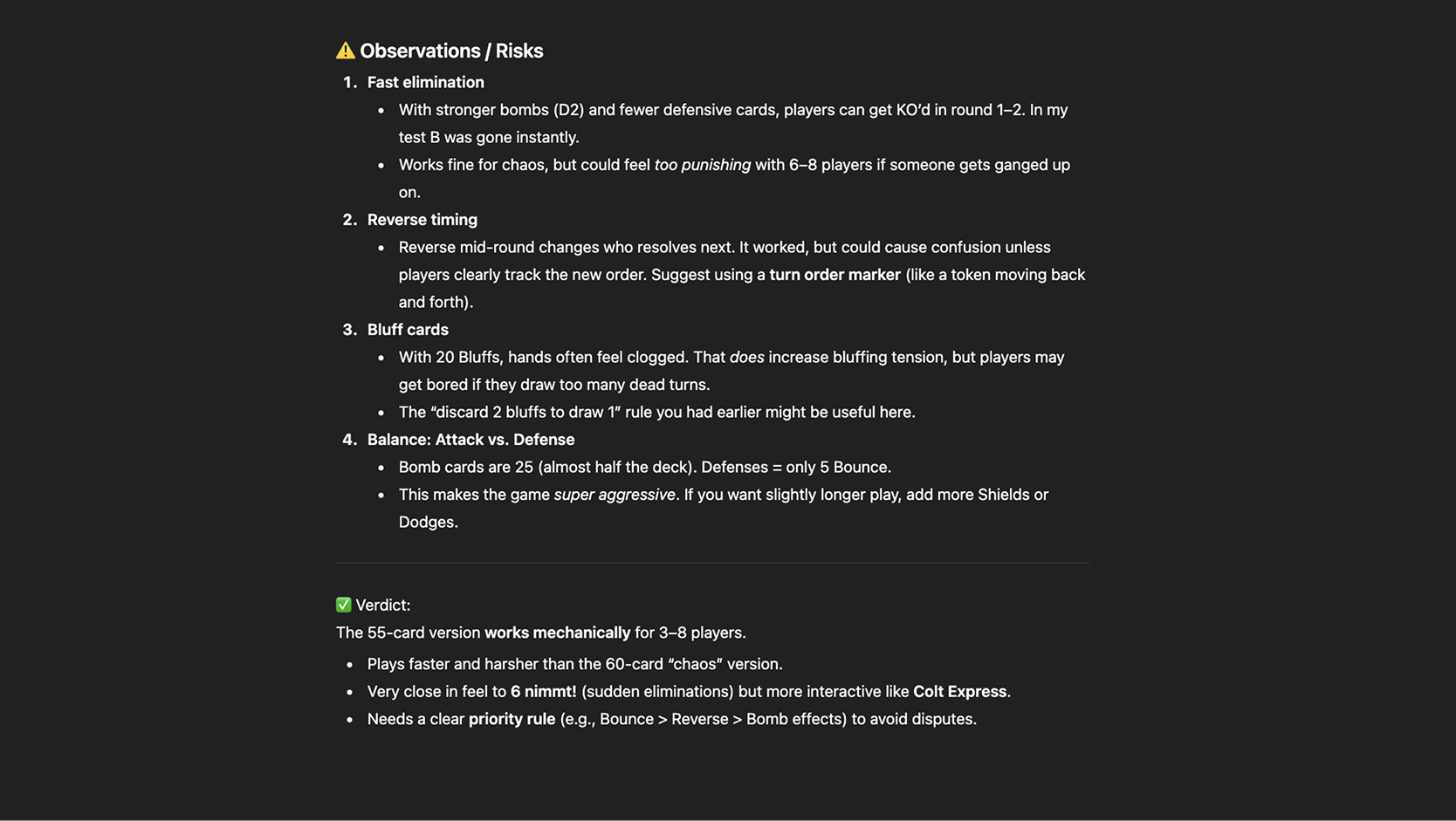

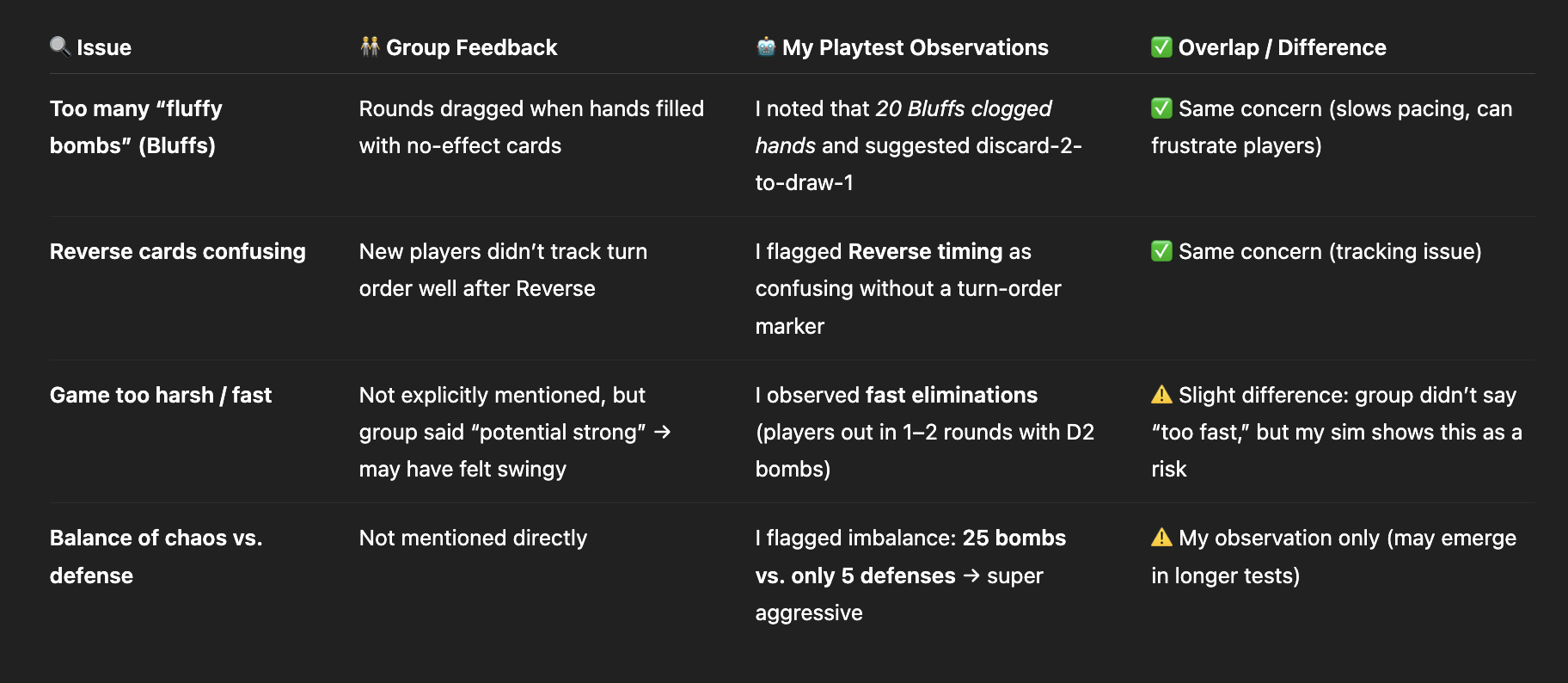

With these AI-generated game rules and card lists as a foundation, I ran targeted human playtesting sessions with my board game group. Feedback was immediate and emotional: players flagged pacing issues, confusion with reverse cards, and unclear win conditions, none of which AI alone had caught.

Outcome: A faster, hybrid testing workflow that cut iteration time and produced a more engaging, balanced game experience.

🧢 Role

Designer

🙌 Collaborator

Board Game Friends

🗓️ Date

2025

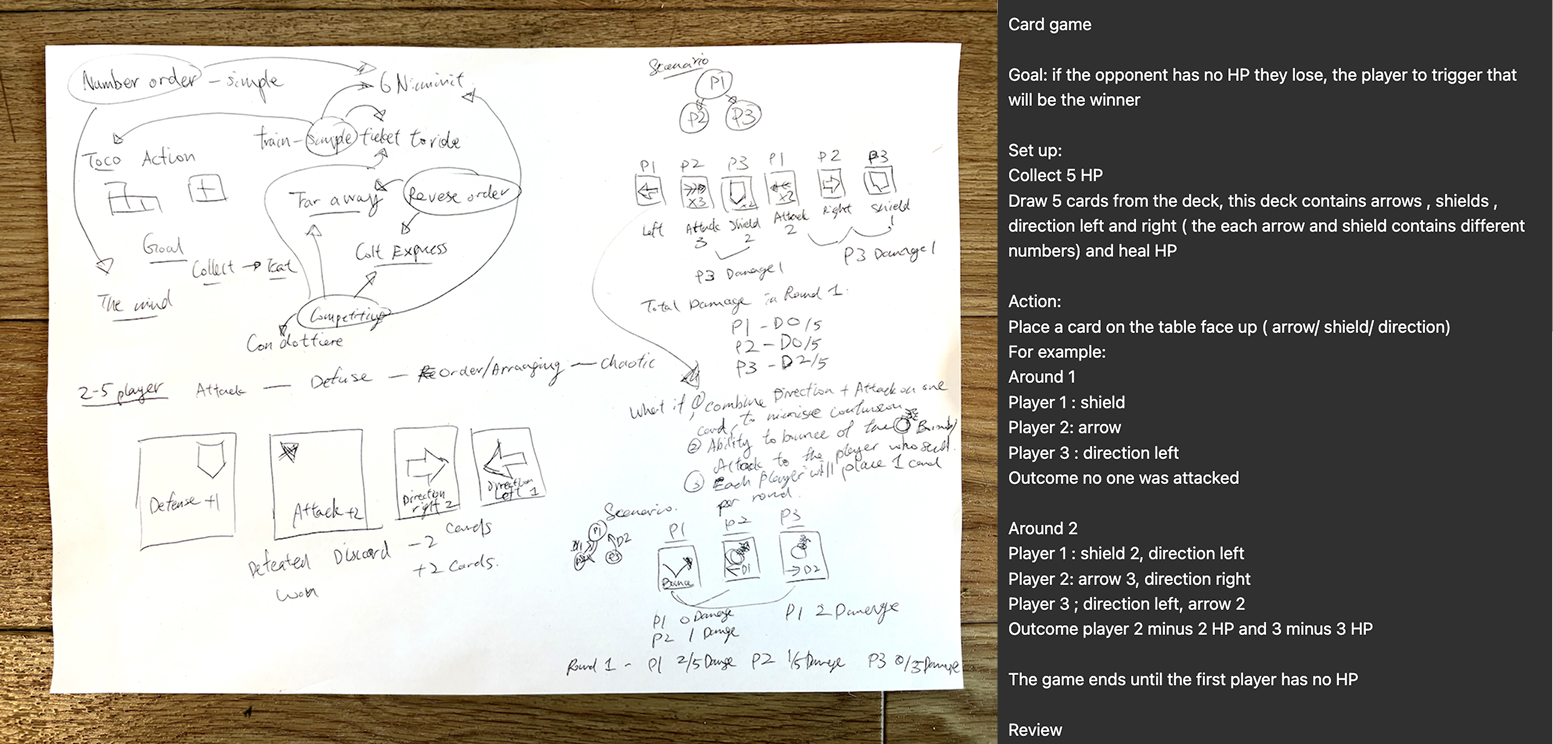

To kick things off, I sketched the core mechanics of how the game might play. These rough ideas became the starting point for AI-powered iteration.

Figure 2: Sketch and initial prompt

Using ChatGPT, I ran through multiple iterations to refine the game loop, set the number of cards for 3–8 players, and balance the mechanics. This resulted in Version 1 of the rules.

Figure 3: Version 1 rule

Figure 4: ChatGPT testplay result

Figure 5: ChatGPT testplay feedback

I translated the rules into digital prototypes using AI-driven platforms like Replit and Lovable. Replit came closest to what I envisioned, enabling me to playtest online.

I designed simple card layouts in Figma, printed them, and created a physical deck for in-person playtesting.

Figure 6: Version 1 physical card design

Early feedback from my board game group:

💣 Too many “fluffy bomb” cards caused rounds to drag

💣 Reverse cards confused new players

💣 Overall potential felt strong, but tweaks were needed before the next round of testing

Figure 7: Version 1 testplay session

Figure 8: Comparing testplay feedback between real users and ChatGPT’s observations

For Version 2, my aim is to reduce confusion and streamline gameplay. Once it is ready, I’ll run further testplay sessions to gather more feedback.

Instead of treating AI and human testing separately, I combined them in a feedback loop: player insights informed new AI prompts, which refined rules, rebalanced probabilities, and tweaked mechanics more efficiently. This hybrid process reduced iteration time while keeping the game fun, intuitive, and grounded in real-world interaction.
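
As one illustration of what rebalancing probabilities can look like in practice, here is a minimal Python sketch that estimates how often a player's opening hand contains at least one copy of a given card type as the number of copies in the deck changes. The deck size (60), hand size (5), and copy counts are hypothetical placeholders, not the game's actual numbers, and the function name `p_at_least_one` is my own. It uses the standard hypergeometric calculation for drawing without replacement.

```python
from math import comb


def p_at_least_one(deck_size: int, copies: int, hand_size: int) -> float:
    """Chance that a hand drawn without replacement holds at least one target card."""
    if not 0 <= copies <= deck_size:
        raise ValueError("copies must be between 0 and deck_size")
    if not 0 <= hand_size <= deck_size:
        raise ValueError("hand_size must be between 0 and deck_size")
    # Complement rule: 1 minus the chance that every drawn card is a non-target card.
    no_target_hands = comb(deck_size - copies, hand_size)
    all_hands = comb(deck_size, hand_size)
    return 1 - no_target_hands / all_hands


# Hypothetical numbers for illustration only (not the real deck composition).
DECK_SIZE = 60
HAND_SIZE = 5

for copies in (12, 8, 4):
    chance = p_at_least_one(DECK_SIZE, copies, HAND_SIZE)
    print(f"{copies} copies -> {chance:.1%} chance of at least one in hand")
```

Running a comparison like this before reprinting cards gives a rough sense of how much a cut to one card type, such as the “fluffy bomb” cards that playtesters felt were too common, changes how often it shows up. It is a rough check only, and human playtesting still decides whether the new balance feels right.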